KAYTUS Unveils Upgraded MotusAI to Accelerate LLM Deployment

Upgrades to inference performance, tool compatibility, resource scheduling, and system stability fast-track large AI model deployment.

KAYTUS, a leading provider of end-to-end AI and liquid cooling solutions, today announced the release of the latest version of its MotusAI AI DevOps Platform at ISC High Performance 2025. The upgraded MotusAI platform delivers significant enhancements in large model inference performance and offers broad compatibility with multiple open-source tools covering the full lifecycle of large models. Engineered for unified and dynamic resource scheduling, it dramatically improves resource utilization and operational efficiency in large-scale AI model development and deployment. This latest release of MotusAI is set to further accelerate AI adoption and fuel business innovation across key sectors such as education, finance, energy, automotive, and manufacturing.

This press release features multimedia. View the full release here: https://www.businesswire.com/news/home/20250612546292/en/

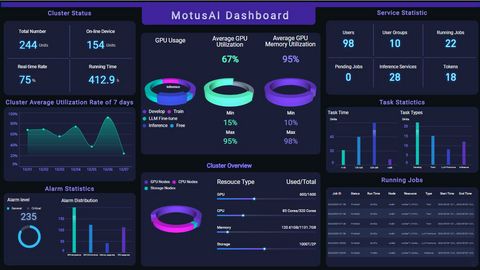

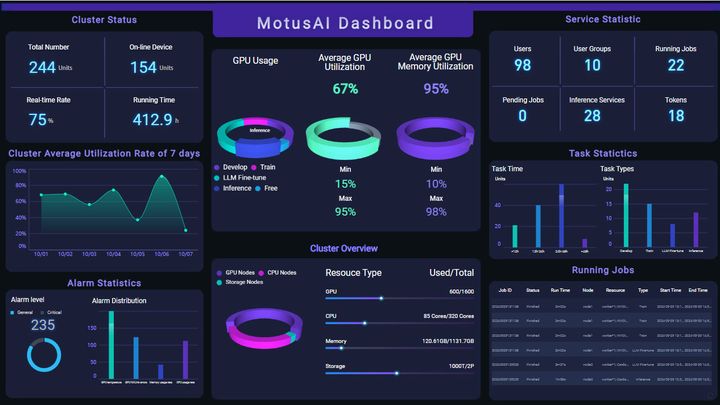

MotusAI Dashboard

As large AI models become increasingly embedded in real-world applications, enterprises are deploying them at scale to generate tangible value across a wide range of sectors. Yet many organizations continue to face critical challenges in AI adoption, including prolonged deployment cycles, stringent stability requirements, fragmented open-source tool management, and low compute resource utilization. To address these pain points, KAYTUS has introduced the latest version of its MotusAI AI DevOps Platform, purpose-built to streamline AI deployment, enhance system stability, and optimize AI infrastructure efficiency for large-scale model operations.

Enhanced Inference Performance to Ensure Service Quality

Deploying AI inference services is a complex undertaking that involves service deployment, management, and continuous health monitoring. These tasks require stringent standards in model and service governance, performance tuning via acceleration frameworks, and long-term service stability, all of which typically demand substantial investments in manpower, time, and technical expertise.

The upgraded MotusAI delivers robust large-model deployment capabilities that combine full service visibility with high performance. By integrating optimized inference frameworks such as SGLang and vLLM, MotusAI enables enterprises to deploy high-performance, distributed inference services quickly and with confidence. Designed to support large-parameter models, MotusAI uses intelligent resource and network affinity scheduling to shorten time-to-launch while maximizing hardware utilization. Its built-in monitoring spans the full stack, from hardware and platforms to pods and services, and offers automated fault diagnosis and rapid service recovery. MotusAI also scales inference workloads dynamically based on real-time usage and resource monitoring, improving service stability.

Comprehensive Tool Support to Accelerate AI Adoption

As AI model technologies evolve rapidly, the supporting ecosystem of development tools continues to grow in complexity. Developers require a streamlined, universal platform to efficiently select, deploy, and operate these tools.

The upgraded MotusAI provides extensive support for a wide range of leading open-source tools, enabling enterprise users to configure and manage their model development environments on demand. With built-in tools such as LabelStudio, MotusAI accelerates data annotation and synchronization across diverse categories, improving data processing efficiency and expediting model development cycles. MotusAI also offers an integrated toolchain for the entire AI model lifecycle. This includes LabelStudio and OpenRefine for data annotation and governance, LLaMA-Factory for fine-tuning large models, Dify and Confluence for large model application development, and Stable Diffusion for text-to-image generation. Together, these tools empower users to adopt large models quickly and boost development productivity at scale.

Hybrid Training-Inference Scheduling on the Same Node to Maximize Resource Efficiency

Efficient utilization of computing resources remains a critical priority for AI startups and small to mid-sized enterprises in the early stages of AI adoption. Traditional AI clusters typically allocate compute nodes separately for training and inference tasks, limiting the flexibility and efficiency of resource scheduling across the two types of workloads.

The upgraded MotusAI overcomes traditional limitations by enabling hybrid scheduling of training and inference workloads on a single node, allowing for seamless integration and dynamic orchestration of diverse task types. Equipped with advanced GPU scheduling capabilities, MotusAI supports on-demand resource allocation, empowering users to efficiently manage GPU resources based on workload requirements. MotusAI also features multi-dimensional GPU scheduling, including fine-grained partitioning and support for Multi-Instance GPU (MIG), addressing a wide range of use cases across model development, debugging, and inference.

MotusAI’s enhanced scheduler significantly outperforms community versions, delivering a 5× improvement in task throughput and a 5× reduction in latency for large-scale pod deployments. It enables rapid startup and environment readiness for hundreds of pods while supporting dynamic workload scaling and tidal scheduling for both training and inference. These capabilities enable seamless task orchestration across a wide range of real-world AI scenarios.

About KAYTUS

KAYTUS is a leading provider of end-to-end AI and liquid cooling solutions, delivering a diverse range of innovative, open, and eco-friendly products for cloud, AI, edge computing, and other emerging applications. With a customer-centric approach, KAYTUS is agile and responsive to user needs through its adaptable business model. Discover more at KAYTUS.com and follow us on LinkedIn and X.
