Computer scientists show the way: AI models need not be SO power hungry

The development of AI models is an overlooked climate culprit. Computer scientists at the University of Copenhagen have created a recipe book for designing AI models that use much less energy without compromising performance. They argue that a model’s energy consumption and carbon footprint should be a fixed criterion when designing and training AI models.

The fact that colossal amounts of energy are needed to google something, talk to Siri, ask ChatGPT to get something done, or use AI in any sense has gradually become common knowledge. One study estimates that by 2027, AI servers will consume as much energy as Argentina or Sweden. Indeed, a single ChatGPT prompt is estimated to consume, on average, as much energy as forty mobile phone charges. Yet, as computer science researchers at the University of Copenhagen point out, the research community and the industry have yet to make energy-efficient, and thus more climate-friendly, AI models a focus of development.

"Today, developers are narrowly focused on building AI models that are effective in terms of the accuracy of their results. It's like saying that a car is effective because it gets you to your destination quickly, without considering the amount of fuel it uses. As a result, AI models are often inefficient in terms of energy consumption," says Assistant Professor Raghavendra Selvan from the Department of Computer Science, whose research explores ways of reducing AI’s carbon footprint.

But the new study, of which he and computer science student Pedram Bakhtiarifard are two of the authors, demonstrates that CO2e emissions can easily be cut substantially without compromising the precision of an AI model. Doing so requires keeping climate costs in mind from the very start, during the design and training phases of AI models.

"If you put together a model that is energy efficient from the get-go, you reduce the carbon footprint in each phase of the model's 'life cycle'. This applies both to the model’s training, which is a particularly energy-intensive process that often takes weeks or months, as well as to its application," says Selvan.

Recipe book for the AI industry

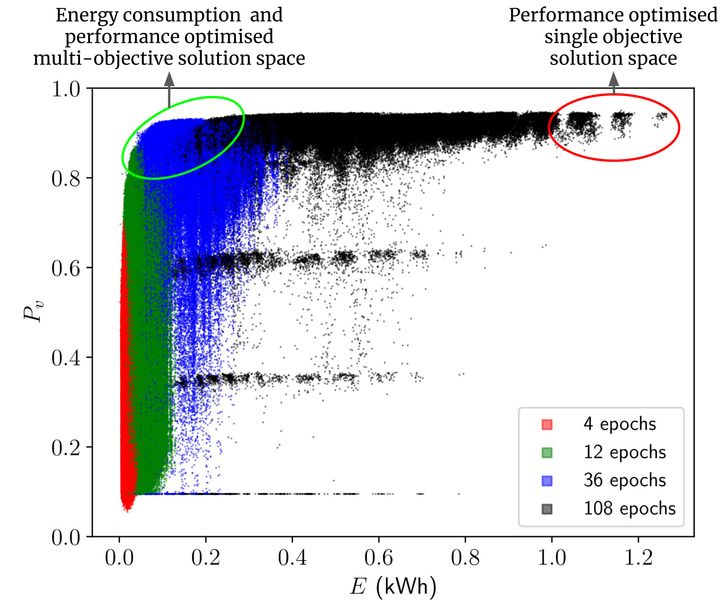

In their study, the researchers calculated how much energy it takes to train more than 400,000 AI models of the convolutional neural network type, and they did so without actually training all of these models. Among other things, convolutional neural networks are used for medical image analysis, language translation, and object and face recognition, a function you might know from the camera app on your smartphone.
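Estimating the energy cost of models that were never trained is commonly done with a cheap predictive model (a surrogate) fitted to a small set of models whose energy use was actually measured. The sketch below illustrates that idea with ordinary least-squares linear regression on a single feature, the parameter count. This is a swapped-in simplification, not the researchers' method: the feature, the linear form and all numbers are hypothetical.

```python
# Hypothetical measurements for a few architectures that were actually trained:
# parameter count (millions) and measured training energy (kWh).
# All numbers are invented for illustration.
MEASURED_PARAMS = [1.0, 2.0, 4.0, 8.0]
MEASURED_ENERGY = [0.35, 0.55, 0.95, 1.75]


def fit_linear(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


slope, intercept = fit_linear(MEASURED_PARAMS, MEASURED_ENERGY)


def predict_energy(params_millions):
    """Surrogate estimate of training energy (kWh) for an untrained model."""
    return slope * params_millions + intercept


# Estimate energy for architectures that were never trained.
for params in [3.0, 16.0]:
    print(f"{params:>5.1f}M params -> {predict_energy(params):.2f} kWh (estimated)")
```

Only the four measured models cost real training energy; every further estimate is a single multiplication and addition.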

Based on these calculations, the researchers present a benchmark collection of AI models that use less energy to solve a given task while performing at approximately the same level. The study shows that by opting for other types of models or by adjusting existing ones, energy savings of 70-80% can be achieved during the training and deployment phases, with a decrease in performance of 1% or less. According to the researchers, this is a conservative estimate.

"Consider our results as a recipe book for AI professionals. The recipes describe not just the performance of different algorithms, but also how energy efficient they are. They show that by swapping one ingredient for another in the design of a model, one can often achieve the same result. So now, practitioners can choose the model they want based on both performance and energy consumption, without needing to train each model first," says Pedram Bakhtiarifard, who continues:

"Oftentimes, many models are trained before the one suspected of being most suitable for a particular task is found. This makes the development of AI extremely energy-intensive. It would therefore be more climate-friendly to choose the right model from the outset, and to pick one that does not consume too much power during training."

The researchers stress that in some fields, like self-driving cars or certain areas of medicine, model precision can be critical for safety. Here, it is important not to compromise on performance. However, this shouldn’t deter efforts to achieve high energy efficiency in other domains.
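The trade-off between performance and energy can be made explicit with an accuracy tolerance: among the candidate models, pick the one with the lowest training energy whose accuracy is within a chosen margin of the best. The sketch below assumes that "1% or less" means one percentage point of accuracy; the model names and numbers are invented for illustration and are not results from the study. In safety-critical settings, the tolerance can simply be set to zero.

```python
# Hypothetical benchmark rows: (name, test accuracy, training energy in kWh).
# All values are invented for illustration; they are not results from the study.
MODELS = [
    ("model_a", 0.940, 10.0),
    ("model_b", 0.935, 2.5),
    ("model_c", 0.925, 1.0),
    ("model_d", 0.900, 0.5),
]


def cheapest_within_tolerance(rows, max_drop=0.01):
    """Return the lowest-energy row whose accuracy is at most `max_drop`
    (absolute accuracy, so 0.01 = one percentage point) below the best."""
    best_acc = max(acc for _, acc, _ in rows)
    candidates = [row for row in rows if best_acc - row[1] <= max_drop]
    return min(candidates, key=lambda row: row[2])


most_accurate = max(MODELS, key=lambda row: row[1])
choice = cheapest_within_tolerance(MODELS, max_drop=0.01)
saving = 1 - choice[2] / most_accurate[2]
# prints "model_b: 75% less training energy than model_a"
print(f"{choice[0]}: {saving:.0%} less training energy than {most_accurate[0]}")

# With no tolerance at all, only the most accurate model qualifies.
strict = cheapest_within_tolerance(MODELS, max_drop=0.0)
```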

"AI has amazing potential. But if we are to ensure sustainable and responsible AI development, we need a more holistic approach that not only has model performance in mind, but also climate impact. Here, we show that it is possible to find a better trade-off. When AI models are developed for different tasks, energy efficiency ought to be a fixed criterion – just as it is standard in many other industries," concludes Raghavendra Selvan.

The “recipe book” put together in this work is available as an open-source dataset for other researchers to experiment with. The information about all 423,000 architectures is published on GitHub, where AI practitioners can access it using simple Python scripts.
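Once energy figures sit next to accuracy in a table, a practitioner could, for example, discard every model that another model beats on both counts and choose from what remains: the accuracy-energy Pareto front. The sketch below is a generic illustration of that filtering step under the assumption of (name, accuracy, energy) rows; it does not use the actual dataset's file format or scripts, and the rows are hypothetical.

```python
def pareto_front(rows):
    """Return the rows not dominated by any other row.

    Row A dominates row B if A is at least as accurate, uses at most as much
    energy, and is strictly better on at least one of the two.
    """
    front = []
    for name, acc, energy in rows:
        dominated = any(
            a >= acc and e <= energy and (a > acc or e < energy)
            for _, a, e in rows
        )
        if not dominated:
            front.append((name, acc, energy))
    # Sort by energy so the trade-off reads from cheapest to most expensive.
    return sorted(front, key=lambda row: row[2])


# Hypothetical (name, accuracy, training energy in kWh) rows.
MODELS = [
    ("model_a", 0.940, 10.0),
    ("model_b", 0.935, 2.5),
    ("model_c", 0.925, 3.0),  # less accurate AND more costly than model_b
    ("model_d", 0.900, 0.5),
]

for name, acc, energy in pareto_front(MODELS):
    print(f"{name}: accuracy {acc:.3f}, {energy} kWh")
```

The quadratic scan is fine for a sketch; for hundreds of thousands of rows, sorting by energy and keeping a running maximum of accuracy would find the same front in O(n log n).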

[BOX:] EQUALS 46 YEARS OF A DANE’S ENERGY CONSUMPTION

The UCPH researchers estimated how much energy it would take to train the 423,000 convolutional neural networks, a subtype of AI model, in this dataset. Among other things, these are used for object detection, language translation and medical image analysis.

Training the 423,000 neural networks examined in the study is estimated to require 263,000 kWh for the training phase alone. This equals the amount of energy that an average Danish citizen consumes over 46 years, and it would take a single computer about 100 years to complete the training. The authors did not actually train these models themselves; instead, they estimated the energy consumption using another AI model, thereby saving 99% of the energy the training would have taken.
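The figures in this box can be cross-checked with simple arithmetic. The sketch below derives what they imply, assuming a 365.25-day year and taking the 99% saving at face value; the derived values are back-of-the-envelope implications, not numbers reported by the study.

```python
HOURS_PER_YEAR = 365.25 * 24  # 8,766 hours, assuming a 365.25-day year

total_kwh = 263_000        # estimated training energy for all the models
years_per_dane = 46        # comparison with an average Danish citizen
years_one_computer = 100   # "about 100 years" on a single computer
saving_fraction = 0.99     # share of energy saved by estimating instead

# Implied annual consumption of one Danish citizen in the comparison.
kwh_per_year = total_kwh / years_per_dane

# Implied average power draw if one computer ran for 100 years.
avg_power_kw = total_kwh / (years_one_computer * HOURS_PER_YEAR)

# Energy still spent if estimating saved 99% of the training energy.
estimation_kwh = total_kwh * (1 - saving_fraction)

print(f"{kwh_per_year:,.0f} kWh per year")      # 5,717 kWh per year
print(f"{avg_power_kw:.2f} kW average draw")     # 0.30 kW average draw
print(f"{estimation_kwh:,.0f} kWh for estimates")  # 2,630 kWh for estimates
```

An average draw of roughly 0.3 kW is in the range of a single workstation with a GPU, which makes the "one computer, 100 years" comparison internally consistent with the 263,000 kWh total.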

[BOX:] WHY IS AI’S CARBON FOOTPRINT SO BIG?

Training AI models consumes a lot of energy and therefore emits a lot of CO2e. This is due to the intensive computations performed during training, which typically run on powerful computers. This is especially true for large models, like the language model behind ChatGPT. AI tasks are often processed in data centers, which demand significant amounts of power to keep computers running and cool. The carbon footprint of these centers also depends on their energy source: centers that rely on fossil fuels have a larger footprint.
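The dependence on the energy source can be written as a simple product: emissions equal the energy consumed times the carbon intensity of the electricity, measured in grams of CO2e per kWh. The intensities below are hypothetical round numbers, chosen only to show how the same training run yields very different footprints on different grids.

```python
def training_emissions_kg(energy_kwh, intensity_g_per_kwh):
    """CO2e in kilograms for a given energy use and grid carbon intensity."""
    return energy_kwh * intensity_g_per_kwh / 1000  # grams -> kilograms


energy_kwh = 1_000  # hypothetical training run

# Hypothetical grid intensities in g CO2e per kWh, for illustration only.
GRIDS = {"mostly renewable": 50, "mixed": 300, "coal-heavy": 800}

for label, intensity in GRIDS.items():
    print(f"{label}: {training_emissions_kg(energy_kwh, intensity):.0f} kg CO2e")
```

Cutting the energy term, as the study proposes, lowers emissions on every grid, whereas the intensity term depends on where and when the computation runs.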

[BOX:] ABOUT THE STUDY

- The scientific article about the study will be presented at the International Conference on Acoustics, Speech and Signal Processing (ICASSP-2024).

- The authors of the article are Pedram Bakhtiarifard, Christian Igel and Raghavendra Selvan from the University of Copenhagen’s Department of Computer Science.


Contacts

Raghavendra Selvan

Assistant Professor

Department of Computer Science

University of Copenhagen

raghav@di.ku.dk

+45 31 87 30 52

Pedram Bakhtiarifard

Master’s student

Department of Computer Science

University of Copenhagen

pba@di.ku.dk

+45 60 21 99 10

Maria Hornbek

Journalist

Faculty of Science

University of Copenhagen

maho@science.ku.dk

+45 22 95 42 83


ABOUT THE FACULTY OF SCIENCE

The Faculty of Science at the University of Copenhagen – or SCIENCE – is Denmark's largest science research and education institution.

The Faculty's most important task is to contribute to solving the major challenges facing the rapidly changing world with increased pressure on, among other things, natural resources and significant climate change, both nationally and globally.
